What does DNA have to do with web application testing? A research team in Luxembourg is using bioinformatics techniques to detect hidden user behaviour patterns and prevent problems before they appear.

Most people expect the web applications they use every day to work without trouble. They click a button or search for a product and assume the page will load as it should. When something goes wrong, such as a link that sends them in circles or a form that refuses to submit, it feels like a small glitch. But for the teams behind those sites, even minor disruptions can frustrate users and, in some cases, lead to financial losses.

These problems don’t happen more often thanks to the quiet, careful work of software testers. Their work starts long before a site goes live, as they replay user journeys over and over to find where something might slow down or break. As web applications grow more complex, the number of possible user interactions grows too, and so does the challenge of keeping everything running smoothly.

Why user behaviour is so hard to understand

Behind the scenes, web application testers often sort through huge datasets recording every click or pause people make while navigating a site. Finding useful patterns in this mass of behaviour is difficult. Even trained reviewers can miss details, especially when thousands of users move through pages in completely different ways.

That’s because even the most carefully designed web application must contend with one stubborn truth: people rarely behave in predictable ways online. Some skim the page, others explore every corner, and some change direction halfway through a task. A simple action, like updating an address, can be completed in dozens of different ways. For testers, this makes it hard to anticipate how real users will actually move through a site.

Modern web applications track thousands of journeys each day. These logs of clicks, scrolls, and pauses pile up quickly, leaving testers with a volume of data too large to analyse manually. Meaningful patterns hide within the noise. While two users may follow completely different paths to reach the same page, both paths still offer clues about how well the site supports everyday tasks.

Because behaviour varies so much, testers can struggle to decide which patterns matter most. A journey that seems unusual may point to a real usability issue, or it may simply reflect a user’s personal habits. Distinguishing between the two is one of the ongoing challenges in software testing.

A joint effort to automate pattern detection

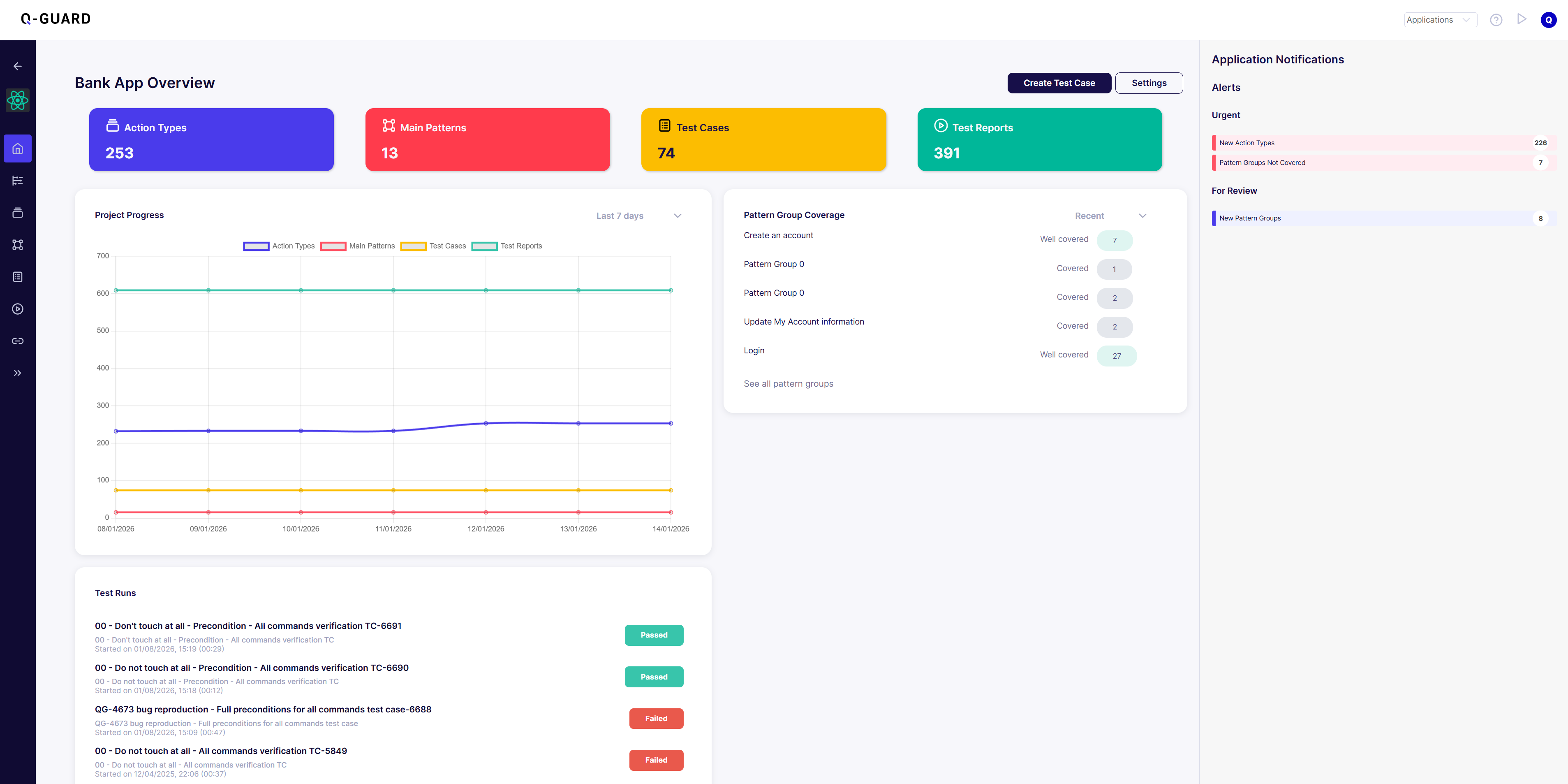

Q-Guard, a testing tool developed by the Luxembourg-based company Q-LEAP, was created to support teams dealing with this growing complexity. It records how users interact with a site so testers can review the data and spot recurring behaviours. Until recently, this still required careful manual analysis. Some patterns were clear, but subtle variations in user paths often went unnoticed.

In 2023, Q-LEAP partnered with the Software Verification and Validation (SVV) group at SnT, the University of Luxembourg’s Interdisciplinary Centre for Security, Reliability and Trust, to expand the tool’s capabilities. The collaboration brought together industry experience from Q-LEAP and academic expertise from researchers Prof. Domenico Bianculli, Dr. Seung Yeob Shin and Mehdi Salemi Mottaghi. Their shared goal was to automate the search for patterns without losing accuracy.

“Q-Guard is a semi-automated solution that helps testers record user interactions, which are then reviewed for patterns,” Shin says. The team wanted to go further by teaching the tool to detect patterns on its own, even when user journeys varied.

Screenshot of Q-Guard in action

Working side by side, the team reviewed existing methods in software testing and began exploring whether approaches from other fields could help. This search led them somewhere unexpected: genetics.

What genetics has to do with software testing

When the team began looking for new ideas, they didn’t expect to find inspiration in bioinformatics. But researchers in that field face a similar challenge: they must search through massive DNA sequences to find recurring patterns, even when variations appear. The logic behind those searches caught their attention.

One widely used bioinformatics tool, BLAST (Basic Local Alignment Search Tool), compares DNA sequences to identify similarities linked to biological functions. It doesn’t need a perfect match. Instead, it recognises patterns that are close enough to matter. This flexibility made the researchers wonder whether the same principle could help make sense of unpredictable user journeys on web applications.

If DNA analysis can detect meaningful variations in genetic sequences, they reasoned, perhaps a testing tool could detect meaningful variations in user behaviour. That idea became the foundation of a new approach. The team began adapting the principles behind BLAST to software testing, shaping a method that recognises familiar user paths even when the steps differ.

The new method focuses on the underlying structure of a journey instead of the exact steps. It compares each interaction sequence to a library of known patterns, checking for similarities even when click paths aren’t identical. This makes it possible to group variations of the same behaviour, helping testers see how people actually use a site.
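The article does not spell out the team’s algorithm, so what follows is only a minimal sketch of the general idea, under stated assumptions. It treats each journey as a sequence of event names, much as BLAST treats DNA as a sequence of letters. It then scores the journey against a library of known patterns using Smith–Waterman local alignment, the exact dynamic-programming alignment that BLAST approximates with faster heuristics. The event names, the scoring values (`MATCH`, `MISMATCH`, `GAP`), the `threshold`, and the functions `local_alignment_score` and `classify` are all hypothetical illustrations. None of them comes from Q-Guard.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical scoring parameters; a real system would tune these on its own data.
MATCH, MISMATCH, GAP = 2, -1, -1


def local_alignment_score(a: List[str], b: List[str]) -> int:
    """Smith-Waterman local alignment score between two event sequences.

    Matching steps earn MATCH, substituted steps cost MISMATCH, and extra or
    skipped steps (gaps) cost GAP. Cell scores never drop below zero, so the
    result reflects the best-matching stretch of the two journeys.
    """
    rows, cols = len(a) + 1, len(b) + 1
    h = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = h[i - 1][j - 1] + (MATCH if a[i - 1] == b[j - 1] else MISMATCH)
            up = h[i - 1][j] + GAP    # step present only in sequence a
            left = h[i][j - 1] + GAP  # step present only in sequence b
            h[i][j] = max(0, diag, up, left)
            best = max(best, h[i][j])
    return best


def classify(journey: List[str], library: Dict[str, List[str]],
             threshold: float = 0.6) -> Tuple[Optional[str], float]:
    """Return the closest known pattern and its normalised similarity.

    Each score is divided by the best possible score for that pattern (every
    step matched), so 1.0 means the whole pattern appears intact inside the
    journey. Below the (hypothetical) threshold, no pattern name is returned.
    """
    best_name, best_sim = None, 0.0
    for name, pattern in library.items():
        if not pattern:
            continue  # an empty pattern cannot be scored
        sim = local_alignment_score(journey, pattern) / (MATCH * len(pattern))
        if sim > best_sim:
            best_name, best_sim = name, sim
    return (best_name, best_sim) if best_sim >= threshold else (None, best_sim)


# Hypothetical library of known behaviour patterns.
library = {
    "update_address": ["login", "open_profile", "edit_address", "save"],
    "checkout": ["view_cart", "enter_shipping", "enter_payment", "confirm"],
}

# A user who takes a detour through settings before updating their address.
journey = ["home", "login", "open_settings", "open_profile",
           "edit_address", "save", "logout"]

print(classify(journey, library))  # ('update_address', 0.875)
```

The detour through `open_settings` costs one gap penalty, so the journey scores 7 of a possible 8 against `update_address` rather than failing an exact-match comparison. That tolerance is the property the researchers borrowed from BLAST. A production system would likely need faster, seeded search over large logs, which this exhaustive sketch does not attempt.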

Essentially, this means Q-Guard can highlight sequences that appear frequently, draw attention to unusual ones, and flag areas where users struggle. If many people pause or backtrack on a particular page, the system can alert testers long before complaints reach support teams.
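The article does not describe how Q-Guard decides that users are struggling. The sketch below is a purely hypothetical heuristic that illustrates the idea of flagging pages where people pause or backtrack. In this sketch, a visit counts as friction when the user stays longer than a pause threshold, or when they immediately return to the page they came from (A → B → A). The journey format of `(page, seconds)` pairs, the thresholds, and the function name `friction_report` are all assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical journey format: a list of (page, seconds_on_page) pairs.
Journey = List[Tuple[str, float]]


def friction_report(journeys: List[Journey], pause_s: float = 30.0,
                    min_rate: float = 0.3) -> Dict[str, float]:
    """Flag pages where users often pause for long or backtrack.

    A visit to page B counts as friction if the user stays at least pause_s
    seconds, or if the next page is the one they came from (A -> B -> A).
    Pages whose share of friction visits reaches min_rate are returned with
    that share. Both thresholds are illustrative, not tuned values.
    """
    visits: Counter = Counter()
    friction: Counter = Counter()
    for journey in journeys:
        for i, (page, seconds) in enumerate(journey):
            visits[page] += 1
            # Backtracking needs a previous and a next page to compare.
            backtracked = (0 < i < len(journey) - 1
                           and journey[i + 1][0] == journey[i - 1][0])
            if seconds >= pause_s or backtracked:
                friction[page] += 1
    rates = {page: friction[page] / visits[page] for page in visits}
    return {page: rate for page, rate in rates.items() if rate >= min_rate}


journeys = [
    [("cart", 5), ("shipping", 45), ("payment", 10)],                 # long pause
    [("cart", 4), ("shipping", 8), ("cart", 6), ("shipping", 12),
     ("payment", 9)],                                                  # backtrack
    [("cart", 3), ("shipping", 10), ("payment", 7)],                  # smooth
]

print(friction_report(journeys))  # {'shipping': 0.5}
```

Here half of all visits to the shipping page involve a long pause or a step back, so that page is flagged for a tester to review. The cart page shows one backtrack in four visits, which falls below the illustrative 30 percent cut-off.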

This shift from manual review to automated pattern recognition doesn’t replace human judgement; it strengthens it. Testers can spend less time sorting through raw logs and more time interpreting what the patterns reveal about user needs.

Outperforming existing solutions

After refining their method, the researchers tested it against existing tools. Their approach reached a 97 percent accuracy rate in identifying user behaviour patterns, even when the journeys varied, and recognised related patterns more reliably than the state-of-the-art techniques it was compared with.

“We proposed this new technique to Q-LEAP, and it is now being adapted to their application domain,” Shin says. The accuracy score suggests that the method can handle the messy reality of real-world user data, giving testers more confidence in the insights it provides.

With clearer insight into user behaviour, web application teams can spot issues earlier. Developers can see which pages support smooth navigation and which ones consistently cause hesitation. Designers gain evidence-based guidance for refining site layouts, helping them create pages that match real user habits.

For the public, the impact is subtle but meaningful. Tasks take less time, pages feel more intuitive, and small frustrations, such as loops, dead ends, or confusing menus, begin to fade. Most people never think about the tools behind a reliable web application, but they feel the difference in every smooth interaction.

For industry teams, the long-term goal is simple: build tools that make testing faster, more accurate, and easier to manage. As digital systems expand, automated support will become essential.

Now that the new method is integrated into Q-Guard, the collaboration is entering its next phase. The team is exploring how the approach could scale to larger datasets and more complex web applications, where user paths multiply into thousands of variations. They also see potential applications in other domains that rely on discovering patterns in large sets of interactions.