What is Artificial Intelligence (AI)?

Did you know that the term “Artificial Intelligence” was coined 70 years ago?

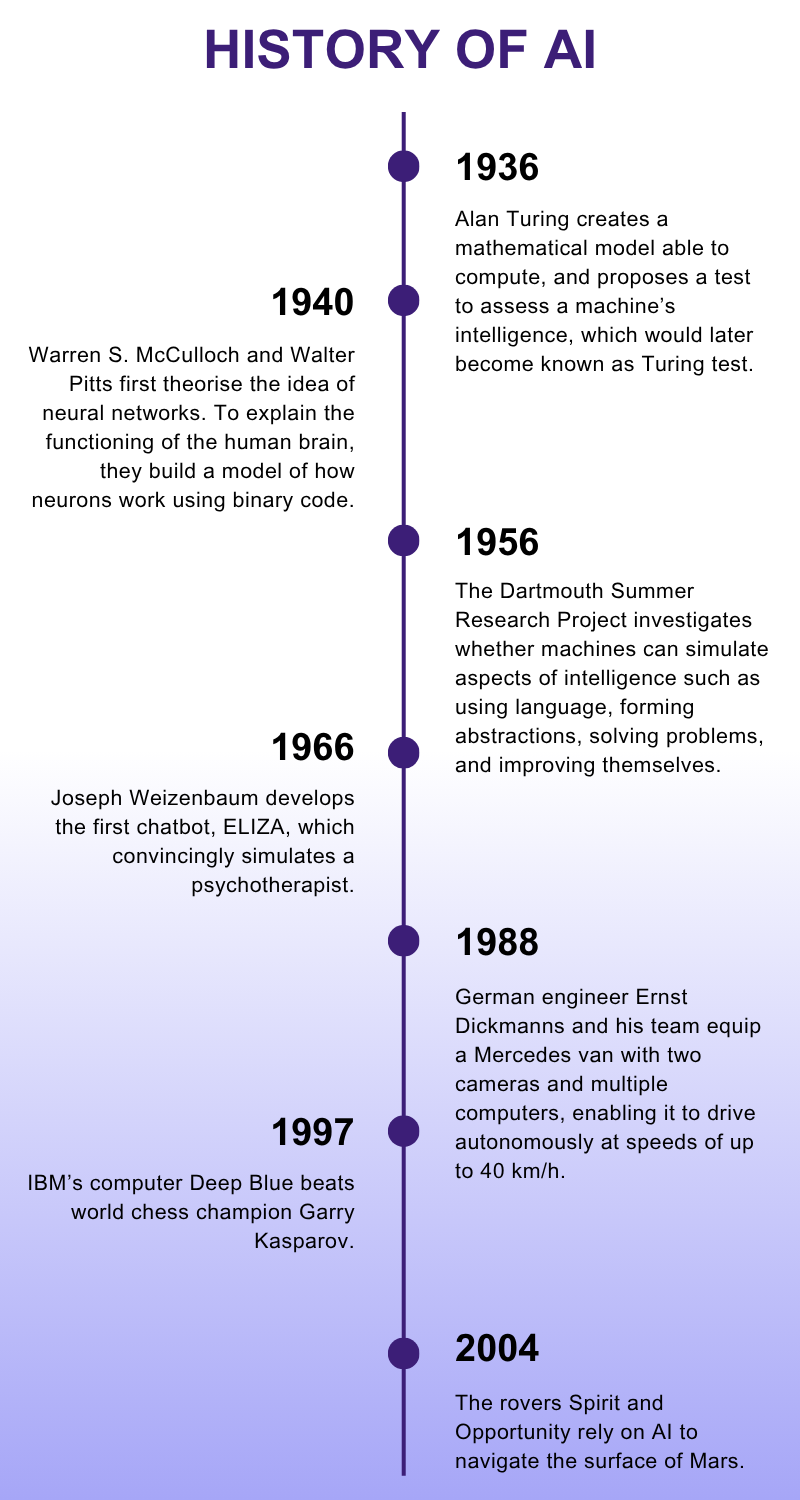

It was first mentioned in a 1955 research project proposal by scientists John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester. This proposal led to the 1956 Dartmouth workshop, which is widely regarded as the founding event of AI. The four scientists would go on to become key figures in the computing world.

Today, AI is an umbrella term encompassing various technologies that simulate tasks associated with human intelligence. This includes, for instance, problem solving and information processing.

AI technologies can analyse human language, power computer vision, and advance robotics, industrial automation and self-driving vehicles.

AI: A timeline

The origins of AI can be traced back long before the existence of computers. Conceptually, they are rooted in the idea that all thought can be created by combining basic units in different ways. This underlying logic was formalised in the 19th century by mathematician George Boole. Boolean algebra provided the logical basis to later design the electronic circuits of computers and manage the information within them by operating on binary data.

On the hardware side, the mechanical calculating machines designed in the 19th century by Charles Babbage, for which Ada Lovelace wrote programs, and the computers built about a century later by Konrad Zuse, first exemplified the structure of computers. They had storage, a processing unit and relied on algorithms to perform mathematical operations.

Turing’s big idea

In 1936, to solve an important problem in logic, mathematician Alan Turing devised a mathematical model – the Turing machine – able to compute. “It is possible to invent a single machine which can be used to compute any computable sequence,” he wrote. His research provided the theoretical foundation for programming languages, and introduced concepts such as input/output, memory usage and data processing. In fact, the Turing machine became the conceptual basis for the computers of today.
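To make the idea concrete, here is a minimal sketch of a Turing machine simulator. The rule table, the state names (`right`, `carry`, `halt`) and the function name are illustrative assumptions, not Turing's own notation. The example machine adds one to a binary number written on the tape.

```python
def run_turing_machine(tape, rules, state="right", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape as a string."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        # Each rule maps (state, symbol read) to (symbol to write, move, next state).
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    return "".join(cells[p] for p in sorted(cells)).strip(blank)


# Hypothetical rule table: walk to the right end of the number, then add 1 with carry.
INCREMENT_RULES = {
    ("right", "0"): ("0", "R", "right"),
    ("right", "1"): ("1", "R", "right"),
    ("right", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}

print(run_turing_machine("1011", INCREMENT_RULES))  # prints 1100 (11 + 1 = 12)
```

The machine only ever reads one symbol, writes one symbol, and moves one step, yet a different rule table would make the same simulator compute something else entirely.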

It was also Turing who wrote, in 1950, the seminal paper “Computing Machinery and Intelligence”. In it, he set out to examine whether machines can think, and proposed a test for their intelligence, today known as the Turing test. With remarkable foresight, he predicted that in 50 years’ time there would be computers able to simulate human behaviour in ways that would look indistinguishable from those of a human.

The 1955 proposal for the Dartmouth conference by McCarthy, Minsky, Shannon and Rochester and their later research expanded on the core idea behind Turing’s test. The scientists explored the idea that computers could simulate any aspect of intelligence, as long as it can be precisely described. These aspects included language processing, abstraction, problem-solving and self-improvement.

Neurons, networks, and the first chatbot

In parallel, in the 1940s neuroscientist Warren S. McCulloch and mathematician Walter Pitts first theorised the idea of neural networks. To explain the functioning of the human brain, they built a model of how neurons work using binary code. Based on their research, Frank Rosenblatt built the first artificial neural network, the Perceptron, in the late 1950s. It consisted of an algorithm able to take in information and classify it into two possible categories.
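The two-category classification described above can be sketched in a few lines. This is a simplified illustration of the perceptron learning rule, not Rosenblatt's original hardware implementation; the function names, the learning rate and the toy dataset (the logical AND of two inputs) are assumptions chosen for the example.

```python
def predict(weights, bias, inputs):
    """Return category 1 if the weighted sum is positive, otherwise category 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Adjust weights and bias whenever a sample is misclassified."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy dataset: output is 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES])  # prints [0, 0, 0, 1]
```

A single perceptron like this can only separate categories with a straight line, which is one reason later networks stack many such units in layers.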

In 1966, computer scientist Joseph Weizenbaum developed the first chatbot, ELIZA. Even though it didn’t pass the Turing test, ELIZA was able to converse with humans and convincingly simulate a psychotherapist. The chatbot caused a stir, starting a debate in society about the relationship between humans and computers, a topic that remains current today.

From the 1970s to the mid-1980s, interest in AI waned, as results failed to live up to the hype created by the early successes. However, the quick advancements in computer science that characterised the 1990s, including the advent of the internet, reignited interest in artificial intelligence, and a lot of resources went into research and development.

This time, results came quickly.

In 1997, IBM’s computer Deep Blue won against world chess champion Garry Kasparov, and for the first time, the world had to deal with the symbolic victory of a computer over a human brain. Shortly afterwards, web crawlers started using AI to scan the internet for information. And in 2004 the rovers Spirit and Opportunity relied on AI to navigate the surface of Mars.

AI applications such as statistical machine translation, speech recognition, self-driving cars and facial recognition techniques characterised the 2000s and 2010s. Today, AI can predict protein structures, participate in drug development, code, and create videos, among countless applications.

How does it work?

AI consists of sets of rules that perform specific computations. These include finding patterns, recognising objects, and generating predictions. Their distinctive feature is their capacity to adapt their behaviour to improve their performance. They can learn from new data, adjust their parameters, and create new sets of rules.

AI encompasses a wide range of technologies that work in different ways. Here are some of its key components:

Machine learning consists of groups of algorithms that have been trained to recognise patterns and make decisions. The more information they process, the more these systems learn from their actions, improving over time at tasks like recognising images or predicting outcomes.

Deep learning is a type of machine learning characterised by the use of complex neural networks. Its multilayered structure and higher degree of independence enable it to simulate the decision-making of the human brain.

Generative AI (Gen AI) is a type of AI that relies on algorithms able to create content such as images, text, video and audio. These algorithms use deep learning to learn patterns from datasets, then create new content that follows these patterns.

Large language models (LLMs) are a subset of Gen AI, and the underlying foundation of AI chatbots. They work by combining words based on how likely each one is to appear next in a given sequence. This knowledge comes from a large amount of categorised textual data that they have been trained on in advance.
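The idea of choosing words by their likelihood to appear next can be illustrated with a deliberately tiny model. The sketch below only counts which word follows which in a sample sentence; real language models use deep neural networks trained on vastly more text, so this is an analogy under simplifying assumptions, and the corpus and function names are invented for the example.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the follower counts of a word into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


CORPUS = "the cat sat on the mat the cat ate the fish"  # made-up training text

counts = build_bigram_counts(CORPUS)
probabilities = next_word_probabilities(counts, "the")
print(max(probabilities, key=probabilities.get))  # prints cat
```

In this corpus "the" is followed by "cat" twice and by "mat" and "fish" once each, so the model assigns "cat" a probability of 0.5 and picks it as the most likely continuation.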

Neural networks consist of artificial neurons organised in layers. An artificial neuron (also called node) is a mathematical function that mimics the functioning of a neuron, processing an input and delivering an output. These nodes adapt their functions over time to improve their performance. The different layers of a neural network perform different functions, such as processing input, extracting key information, and producing an output.
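As a rough sketch of what "a mathematical function organised in layers" means, the code below passes an input through a small network. The weights, the layer sizes and the choice of a sigmoid activation are assumptions made for illustration, and the training step that adapts the weights over time is not shown.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, squashed to the range 0..1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid activation


def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all receive the same inputs."""
    return [neuron(inputs, weights, bias) for weights, bias in zip(weight_rows, biases)]


def forward(inputs, layers):
    """Feed the output of each layer into the next one."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
NETWORK = [
    ([[0.5, -0.6], [0.3, 0.8]], [0.1, -0.2]),  # hidden layer
    ([[1.2, -0.7]], [0.05]),                   # output layer
]

print(forward([1.0, 2.0], NETWORK))  # a single value between 0 and 1
```

Training a network means nudging the numbers in `NETWORK` until the outputs match known examples, which is how the nodes "adapt their functions" in practice.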

Why it matters: impact and applications

From email assistants and self-driving taxis to predictive maintenance in factories, AI technologies are transforming our daily lives and industries in countless ways.

Natural language processing (NLP) enables computers to “understand” and produce natural language. It relies on statistical modelling and deep learning, constantly predicting which words should come next in specific contexts based on its training data.

AI-powered language systems can summarise long email chains, translate between languages instantly, and even generate creative content, making communication more efficient across global boundaries.

Computer vision allows IT systems to analyse and interpret visual data for many purposes. From the facial recognition on your phone to monitoring factory equipment, it has applications in security, manufacturing, and everyday technology.

Advanced systems can now detect potential security threats, identify product defects on assembly lines, and help autonomous vehicles navigate complex environments.

AI has crucial applications in manufacturing and production. These include predictive maintenance to forecast and prevent equipment failures, quality control through computer vision systems, supply chain optimisation, and creating digital twins of complex systems.

Computer vision and machine learning also allow mobile robots and robotic arms to carry out a large range of tasks. Mobile robots often perform tasks that are too dangerous for humans, such as inspecting hazardous areas. Robotic arms are used to perform fast or repetitive actions in industrial settings, such as loading and unloading heavy items, assembling and sorting products.

Autonomous vehicles are powered by several AI technologies working in concert. Computer vision processes video data, while several other sensors feed real-time data to a decision-making system. Predictive modelling helps these vehicles to forecast the actions of other vehicles and pedestrians, enabling self-driving cars to avoid collisions.

AI, today: limitations and opportunities

Current adoption

AI technology has rapidly evolved from theoretical concepts to practical tools that impact our daily lives. As of 2025, AI has reached a critical point in its development and adoption across society.

Many businesses and institutions appreciate the benefits of using AI: increased efficiency and productivity, improved processes, and better forecasts can quickly add up to billions in savings. AI can reduce human error, avoiding costly mistakes, and it can analyse large amounts of unstructured data to extract meaningful information and save time. By taking over repetitive and tedious tasks, AI frees up time for employees to focus on more complex issues. In fact, according to a 2023 McKinsey study, half of today’s work activities could be automated between 2030 and 2060.

According to 2025 Eurostat data, the sectors that use AI the most are information and communication, followed by professional and scientific activities, utilities, real estate, and administrative services. This widespread adoption shows how AI has become essential across different industries.

Challenges and limitations

However, current AI systems still have significant limitations. Large language models are not reliable sources of information. They make mistakes, known as hallucinations, generating content that is factually incorrect, biased, and potentially harmful. While researchers understand the causes of this erratic behaviour, most big players in today’s AI space operate so-called black-box AI systems. That means that there is no transparency on how these systems work, and no way to pinpoint bias or weak points to address them.

And it’s not just LLMs, either. Police facial recognition software has failed to recognise people of colour, leading to racial profiling. And in 2021, the Dutch government resigned over a scandal involving an AI algorithm that wrongly accused 26,000 parents of childcare benefit fraud.

Moving toward responsible AI

“Developing trustworthy AI is a challenge that both the scientific and the industrial world need to tackle with the greatest care to achieve safe, fair, and sustainable growth.”

Research scientist

In response to these issues, there’s growing demand for “trustworthy AI” – systems that are transparent, fair, and reliable. The European Union has taken the lead with its Artificial Intelligence Act, the world’s first comprehensive AI law, designed to ensure AI systems are “safe, transparent, traceable, non-discriminatory and environmentally friendly”.

At the same time, AI should be used conscientiously, given its impact on the environment. For instance, generating one image with AI can consume about as much energy as fully charging your phone. AI has a cost, and society needs to be made aware of it to use it responsibly.

The technology also has an impact on us as humans. Recently, scientists found that excessive reliance on chatbots leads to a decline in cognitive skills, which is especially harmful in younger generations. Increased energy consumption and poor cognitive health represent huge risks for society as a whole and need to be urgently addressed by regulators.

Despite these challenges, AI continues to offer tremendous potential for improving efficiency, reducing costs, and supporting better decision-making. As we move forward, the focus is shifting toward developing AI that is not only powerful but also responsible, sustainable, and aligned with human values.

What’s next: SnT’s research

SnT researchers use a variety of AI technologies to conduct research in Cybersecurity, Autonomous Systems, FinTech, and Space Systems. Here are some of our AI projects:

Detecting Deepfakes with Computer Vision

The Computer Vision, Imaging and Machine Intelligence (CVI2) research group has been working with Post Luxembourg to detect deepfakes, which are photos and videos manipulated with AI. Deepfakes threaten digitalisation by diminishing confidence in digital solutions as a whole and represent a menace to society when used to spread fake news. The group has recently created a deepfake detection model that carries over to new deepfakes, even as deepfake technology evolves. Their approach removes the need for constant retraining of the model.

Implementing Predictive Maintenance and waste reduction

The Security Design and Validation (SerVal) research group has been working for years in partnership with Cebi, the Luxembourg-based global producer of electromechanical components. Over the years, the joint research group has implemented open communication protocols and standards between proprietary, vendor-locked devices on Cebi’s production lines. Later on, with support from the FNR, the joint team designed a decision-making system to optimise maintenance and production processes.

Trustworthy and Responsible AI at SnT

SnT researchers follow the principles of responsible AI in their research projects. Their goal is to develop AI solutions that empower humans, while being safe, transparent, accountable, inclusive, sustainable, and respectful of privacy. In this, they are inspired by the EU Ethics Guidelines for Trustworthy AI.

Optimising sustainable logistics with AI

The Services and Data Management (SEDAN) research group has partnered with Gulliver to develop an AI-based solution for sustainable logistics planning. The project aimed to address the significant emissions from heavy-duty trucking, which contributes 22% of EU road transport emissions. Their solution focused on optimising route planning by analysing delivery requirements, thereby reducing environmental impact and improving efficiency.

Other AI projects at SnT include autonomous vehicles, LLMs for GDPR compliance, federated learning in the financial industry, machine learning for space applications, and many more.