Infrastructure

Centre de Calcul (CDC)

The HPC facilities are hosted in the University’s “Centre de Calcul” (CDC) data center, located on the Belval Campus. Spread over two floors of ~1000 m² each, the CDC features five server rooms per floor, each offering ~100 m² of IT floor space. One floor is primarily dedicated to hosting HPC equipment (compute, storage and interconnect). A power generation station supplies the HPC floor with up to 3 MW of electrical power and 3 MW of cooling capacity through chilled water at a 12-18°C regime, used for traditional airflow cooling with in-row units. A separate hot water circuit (30-40°C) makes it possible to implement Direct Liquid Cooling (DLC) solutions, as used by the Aion supercomputer in two dedicated server rooms.
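To give a feel for what 3 MW of chilled-water cooling means, the sensible-heat balance P = ṁ · c_p · ΔT can be solved for the water mass flow ṁ. This is an order-of-magnitude sketch, not a CDC specification: it assumes the 12-18°C regime means 12°C supply and 18°C return (ΔT = 6 K), that the full 3 MW is carried by the water loop, and textbook water properties (c_p ≈ 4186 J/(kg·K), density ≈ 1000 kg/m³).

```python
# Order-of-magnitude estimate of chilled-water flow for the HPC floor.
# Assumptions (not CDC specifications): 12 °C supply / 18 °C return,
# the full 3 MW is removed by the water loop, textbook water properties.
COOLING_POWER_W = 3e6          # 3 MW of cooling, from the text
SUPPLY_C, RETURN_C = 12.0, 18.0
CP_WATER = 4186.0              # J/(kg*K), assumed specific heat of water
DENSITY_WATER = 1000.0         # kg/m^3, assumed density of water


def water_mass_flow_kg_s(power_w: float, delta_t_k: float) -> float:
    """Sensible-heat balance P = m_dot * c_p * delta_T, solved for m_dot."""
    return power_w / (CP_WATER * delta_t_k)


mass_flow = water_mass_flow_kg_s(COOLING_POWER_W, RETURN_C - SUPPLY_C)
volume_flow_m3_h = mass_flow / DENSITY_WATER * 3600
print(f"~{mass_flow:.0f} kg/s, i.e. ~{volume_flow_m3_h:.0f} m^3/h")
# prints: ~119 kg/s, i.e. ~430 m^3/h
```

A narrower temperature difference would require proportionally more flow for the same power, which is one reason the ΔT of a cooling regime matters.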

Our HPC systems

AION

Aion is our latest Eviden/AMD supercomputer, delivered in 2021. It consists of 354 compute nodes (two AMD EPYC Rome 7H12 CPUs and 256 GB of memory per node), totaling 45,312 compute cores and 90,624 GB of RAM, with a peak performance of about 1.9 PetaFLOP/s.

It is based on Eviden Sequana XH2000 racks, which are fully liquid cooled and contribute to a very good power usage effectiveness (PUE) ratio, supporting sustainable operation.
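The Aion totals quoted above follow directly from the per-node configuration. The sketch below reproduces them; the 64 cores per CPU, the 2.6 GHz base clock of the EPYC 7H12 and the 16 double-precision FLOPs per cycle per Zen 2 core (two 256-bit FMA units) are assumed values taken from AMD's public specifications, not from this page.

```python
# Reproduce Aion's headline figures from its per-node configuration.
# Assumed (not stated above): EPYC 7H12 = 64 cores at 2.6 GHz base clock,
# 16 double-precision FLOPs per cycle per Zen 2 core.
NODES = 354
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 64      # assumed EPYC 7H12 core count
BASE_CLOCK_HZ = 2.6e9      # assumed EPYC 7H12 base clock
DP_FLOPS_PER_CYCLE = 16    # assumed Zen 2 FMA throughput
RAM_PER_NODE_GB = 256


def aion_totals() -> tuple[int, int, float]:
    """Return (total cores, total RAM in GB, theoretical peak in PFLOP/s)."""
    cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
    ram_gb = NODES * RAM_PER_NODE_GB
    rpeak_pflops = cores * BASE_CLOCK_HZ * DP_FLOPS_PER_CYCLE / 1e15
    return cores, ram_gb, rpeak_pflops


cores, ram_gb, rpeak = aion_totals()
print(f"{cores} cores, {ram_gb} GB RAM, Rpeak ~ {rpeak:.2f} PFLOP/s")
# prints: 45312 cores, 90624 GB RAM, Rpeak ~ 1.88 PFLOP/s
```

Under these assumptions the theoretical peak comes out at about 1.88 PFLOP/s, consistent with the "about 1.9 PetaFLOP/s" stated above.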

IRIS

Iris is a Dell/Intel supercomputer with 196 Intel-based compute nodes and a peak performance of 1.1 PetaFLOP/s. More precisely, Iris features three types of computing resources:

- “regular” nodes: dual Intel Xeon Broadwell or Skylake CPUs (28 cores), 128 GB RAM

- “gpu” nodes: dual Intel Xeon Skylake CPUs (28 cores), 4 Nvidia Tesla V100 SXM2 GPU accelerators (16 or 32 GB per GPU), 768 GB RAM

- “bigmem” nodes: quad Intel Xeon Skylake CPUs (112 cores), 3072 GB RAM
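To make the three Iris node profiles concrete, here is a small data model of them together with a hypothetical helper, `pick_node_type`, that returns the least specialised node type able to host a single-node job with a given memory (and optional GPU) requirement. The per-node figures come from the list above; the selection policy is an illustrative assumption, not the platform's scheduling rule, and actual placement on Iris is decided by the batch scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    name: str
    cores: int
    ram_gb: int
    gpus: int = 0

    @property
    def ram_per_core_gb(self) -> float:
        return self.ram_gb / self.cores


# Per-node figures from the list above, ordered from least to most specialised.
IRIS_NODE_TYPES = (
    NodeType("regular", cores=28, ram_gb=128),
    NodeType("bigmem", cores=112, ram_gb=3072),
    NodeType("gpu", cores=28, ram_gb=768, gpus=4),
)


def pick_node_type(mem_gb: float, gpus: int = 0) -> NodeType:
    """Hypothetical helper: least specialised Iris node type for a one-node job.

    GPU nodes are only chosen when GPUs are requested, so memory-hungry
    CPU-only jobs go to 'bigmem' instead of occupying accelerators.
    """
    for node in IRIS_NODE_TYPES:
        fits_memory = node.ram_gb >= mem_gb
        fits_gpus = node.gpus >= gpus if gpus > 0 else node.gpus == 0
        if fits_memory and fits_gpus:
            return node
    raise ValueError(f"no Iris node type offers {mem_gb} GB and {gpus} GPU(s)")


for node in IRIS_NODE_TYPES:
    print(f"{node.name:8s} {node.ram_per_core_gb:5.1f} GB/core")
print(pick_node_type(100).name)          # regular
print(pick_node_type(500).name)          # bigmem
print(pick_node_type(500, gpus=2).name)  # gpu
```

Note that "gpu" and "bigmem" nodes offer the same memory per core (about 27.4 GB); what sets bigmem apart is the 3 TB available within a single node.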

SLICES-LU

The SLICES-LU node (also the Luxembourg site of Grid’5000) hosts systems for experimental research in all areas of computer science. As part of SLICES and Grid’5000, University researchers have access to a large set of heterogeneous resources hosted at the other sites abroad. These resources are reconfigurable at the system and network levels, and the infrastructure as a whole is designed to support experiment reproducibility. In particular, a user can obtain administrative rights on the compute nodes and fully customize their environment.

As of 2025, our site hosts three types of computing resources:

- “small-gpu” nodes: 7x Dell R760XA; per node: 96 CPU cores, 512 GB RAM, 4x AMD MI210 GPUs (64 GB of memory each)

- “big-gpu” node: 1x Dell XE9680; 104 CPU cores, 2048 GB RAM, 8x AMD MI300X GPUs (192 GB of memory each)

- “regular” nodes: 16x Intel-based compute nodes; per node: 12 CPU cores, 32 GB RAM
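The site-wide capacity of SLICES-LU can be derived from the node list above. This sketch assumes that the core, RAM and node-level figures are per node and that the quoted GPU memory is per GPU (64 GB per MI210, 192 GB per MI300X); the `NodeGroup` type and `site_totals` function are illustrative names only.

```python
from typing import NamedTuple


class NodeGroup(NamedTuple):
    count: int           # number of nodes in the group
    cpu_cores: int       # per node
    ram_gb: int          # per node
    gpus: int = 0        # per node
    gpu_mem_gb: int = 0  # per GPU (assumed reading of the text)


# Figures from the list above (as of 2025).
SLICES_LU = {
    "small-gpu": NodeGroup(count=7, cpu_cores=96, ram_gb=512, gpus=4, gpu_mem_gb=64),
    "big-gpu": NodeGroup(count=1, cpu_cores=104, ram_gb=2048, gpus=8, gpu_mem_gb=192),
    "regular": NodeGroup(count=16, cpu_cores=12, ram_gb=32),
}


def site_totals(groups: dict[str, NodeGroup]) -> dict[str, int]:
    """Aggregate per-node figures into site-wide totals."""
    g = groups.values()
    return {
        "nodes": sum(x.count for x in g),
        "cpu_cores": sum(x.count * x.cpu_cores for x in g),
        "ram_gb": sum(x.count * x.ram_gb for x in g),
        "gpus": sum(x.count * x.gpus for x in g),
        "gpu_mem_gb": sum(x.count * x.gpus * x.gpu_mem_gb for x in g),
    }


print(site_totals(SLICES_LU))
# prints: {'nodes': 24, 'cpu_cores': 968, 'ram_gb': 6144, 'gpus': 36, 'gpu_mem_gb': 3328}
```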

Storage

The HPC facility relies on two types of distributed/parallel file systems to deliver high-performance data storage:

- IBM Spectrum Scale, a high-performance clustered file system hosting personal directories and research project data. Our system is composed of two tiers: a fast tier (250 TB) based on NVMe drives, and a slower, higher-capacity tier (2.75 PB) based on traditional hard disk drives and used for project storage.

- Lustre, an open-source parallel file system dedicated to large-scale, local, parallel storage of temporary scratch data, with a capacity of 1 PB based on fast SSDs and hard disks.
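The division of roles between Spectrum Scale (persistent project data) and Lustre (temporary scratch data) suggests a common staging pattern: copy inputs to scratch, do the I/O-heavy work there, and copy only the results back to project storage. The sketch below assumes that a `SCRATCH` environment variable points to the Lustre scratch area (it falls back to the system temp directory when unset, e.g. for local testing); `run_on_scratch` is a hypothetical helper, not a ULHPC tool.

```python
import os
import shutil
import tempfile
from collections.abc import Callable
from pathlib import Path


def run_on_scratch(inputs: Path, results_dir: Path,
                   work: Callable[[Path], Path]) -> Path:
    """Stage `inputs` to scratch, run `work` there, keep only its result.

    Assumption: SCRATCH points to the Lustre scratch area; if unset, the
    system temp dir is used instead. `results_dir` would live on project
    storage (Spectrum Scale).
    """
    scratch_root = Path(os.environ.get("SCRATCH", tempfile.gettempdir()))
    with tempfile.TemporaryDirectory(dir=scratch_root) as tmp:
        staged = Path(tmp) / inputs.name
        shutil.copy2(inputs, staged)      # copy input data to scratch
        output = work(staged)             # I/O-heavy step runs on scratch
        results_dir.mkdir(parents=True, exist_ok=True)
        final = results_dir / output.name
        shutil.copy2(output, final)       # copy the result back to project storage
    # The temporary scratch directory has been deleted at this point.
    return final


if __name__ == "__main__":
    base = Path(tempfile.mkdtemp())
    src = base / "input.txt"
    src.write_text("hello")

    def shout(path: Path) -> Path:
        out = path.with_suffix(".out")
        out.write_text(path.read_text().upper())
        return out

    result = run_on_scratch(src, base / "results", shout)
    print(result.read_text())  # HELLO
```

Cleaning up the scratch directory automatically reflects the temporary nature of scratch data, while only the final output occupies capacity on the project tier.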

In addition, the following systems complete the storage infrastructure:

- An off-site backup server based on Dell hardware, with 400 TB of usable capacity, is hosted in the University's Disaster Recovery Site and connected directly to our network.

- OneFS, a global, lower-performance Dell/EMC Isilon solution that hosts project data and also serves backup and archival purposes.

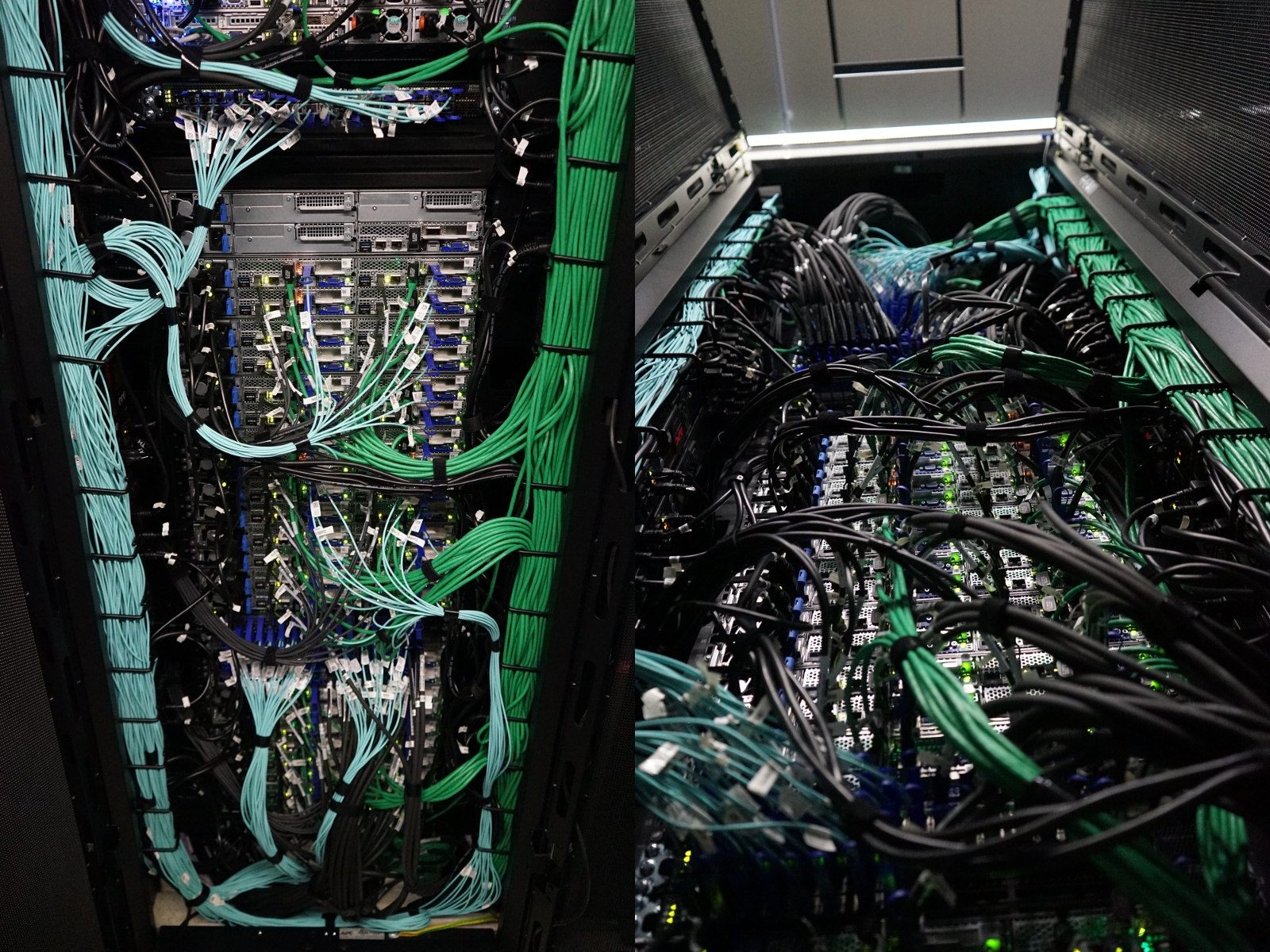

Networking

All systems within Iris and Aion, as well as the shared parallel file systems, are interconnected by a fast, low-latency InfiniBand network:

- HDR (200 Gbps) for Aion, and

- EDR (100 Gbps) for Iris.

Each cluster has its own fat-tree topology with a low blocking factor (1:2 for Aion, 1:1.5 for Iris), which keeps contention low for communication between compute nodes in parallel computing jobs.
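The blocking factor bounds the bandwidth each node can count on when all nodes on a leaf switch communicate across the spine at the same time. This simplified model reads a 1:b factor as one uplink port for every b downlink ports, all at the same speed, and assumes uniform traffic; communication that stays within one leaf switch is not limited by the blocking factor.

```python
def worst_case_node_bandwidth_gbps(link_gbps: float, blocking: float) -> float:
    """Per-node bandwidth if every node on a leaf switch talks across the spine.

    A 1:b blocking factor is read here as one uplink port for every b
    downlink ports (equal speeds), so uplinks carry at most 1/b of the
    traffic the nodes could inject.
    """
    if blocking < 1:
        raise ValueError("blocking factor b must be >= 1")
    return link_gbps / blocking


# (link speed in Gbps, blocking factor b) from the text above
CLUSTERS = {"Aion (HDR)": (200.0, 2.0), "Iris (EDR)": (100.0, 1.5)}

for name, (link, b) in CLUSTERS.items():
    bw = worst_case_node_bandwidth_gbps(link, b)
    print(f"{name}: {link:.0f} Gbps link, ~{bw:.1f} Gbps per node at full cross-spine load")
```

Under this model, Aion nodes retain about 100 Gbps and Iris nodes about 66.7 Gbps each in the worst case, while a non-blocking (1:1) fabric would keep the full link speed.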

Our infrastructure is also connected through traditional Ethernet networking, mainly based on Cisco equipment. Ethernet is needed to access external resources on the Internet or on the University network, and internally for all regular network services. Our network is connected to the Internet and to the University internal network via 4x 40 Gbps links.
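The 4x 40 Gbps uplinks give an aggregate external capacity of 160 Gbps. A back-of-the-envelope estimate of how long moving a dataset takes at line rate is sketched below. Whether the four links are bundled or routed independently is not stated above, so this is an assumption: when traffic is spread by per-flow hashing, a single transfer stream typically uses one physical link, making the single-link figure the more realistic bound for one transfer. The `efficiency` parameter is a hypothetical derating factor for protocol overhead and competing traffic.

```python
def transfer_time_s(size_tb: float, links: int = 4, link_gbps: float = 40.0,
                    efficiency: float = 1.0) -> float:
    """Ideal time to move `size_tb` terabytes (10**12 bytes) over the uplinks.

    `efficiency` (0 < efficiency <= 1) is a hypothetical derating for
    protocol overhead and competing traffic; 1.0 means full line rate.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_tb * 1e12 * 8
    return bits / (links * link_gbps * 1e9 * efficiency)


print(transfer_time_s(1.0))           # all four links: 50.0 s
print(transfer_time_s(1.0, links=1))  # single 40 Gbps link: 200.0 s
```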

Our Ethernet topology is designed to cover all of our data center rooms and to accommodate future evolutions and expansions of the infrastructure, including the integration of new clusters. It is composed of two layers:

- A redundant, highly available gateway layer operating at layers 2 and 3, which performs routing, filtering and aggregation of the other network equipment;

- A switching layer operating at layer 2, connecting all servers and compute nodes at 10 Gbps or more (up to 100 Gbps).

References

For a more exhaustive and formal description of the infrastructure, you may refer to:

- hpc-docs.uni.lu – the technical documentation of the University of Luxembourg HPC platform

- Management of an Academic HPC Research Computing Facility: The ULHPC Experience 2.0, in 6th High Performance Computing and Cluster Technologies Conference (HPCCT 2022)

- Aggregating and Consolidating two High Performant Network Topologies: The ULHPC Experience, in ACM Practice and Experience in Advanced Research Computing (PEARC’22)